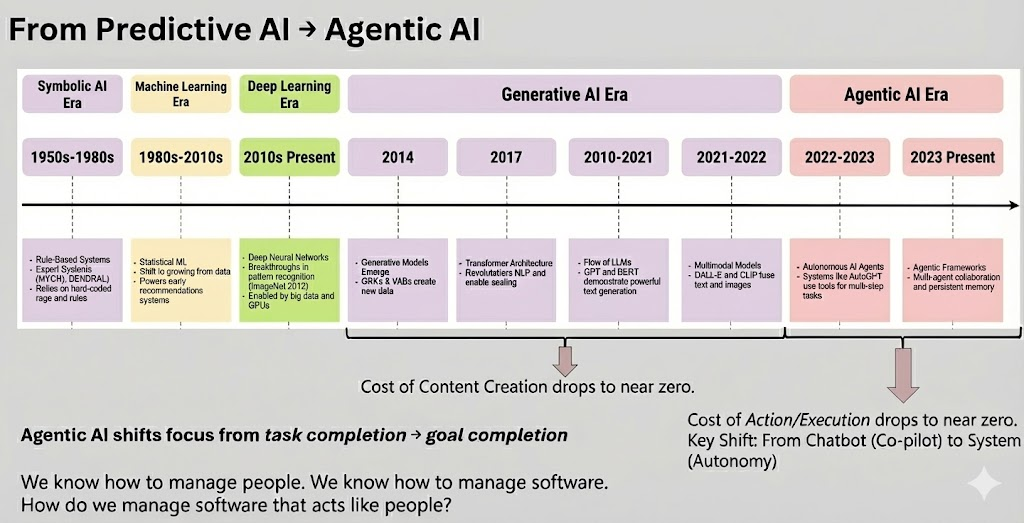

For the last 20 years, we have managed people, and we have managed software tools. Today, we are entering an era where we must manage Artificial Intelligence (AI) enabled software that acts like people. The evolution of AI has reached a critical inflection point. While the “Generative AI Era” (2021–2022) focused on solving the problem of content creation [1], we have officially entered the “Agentic AI Era”. This transition marks a fundamental shift from AI as a reactive tool to AI as a proactive partner, moving beyond simple task completion to holistic goal completion.

The Delegation Crisis and the Agentic Shift

We have largely solved the challenge of generating content via GenAI; the new frontier is solving the problem of delegating work to AI. This shift moves the industry from a “Chatbot/Co-pilot” mentality to a “System” mentality where autonomy is the core driver (Figure 1).

Figure 1: From Predictive AI to Agentic AI [2]

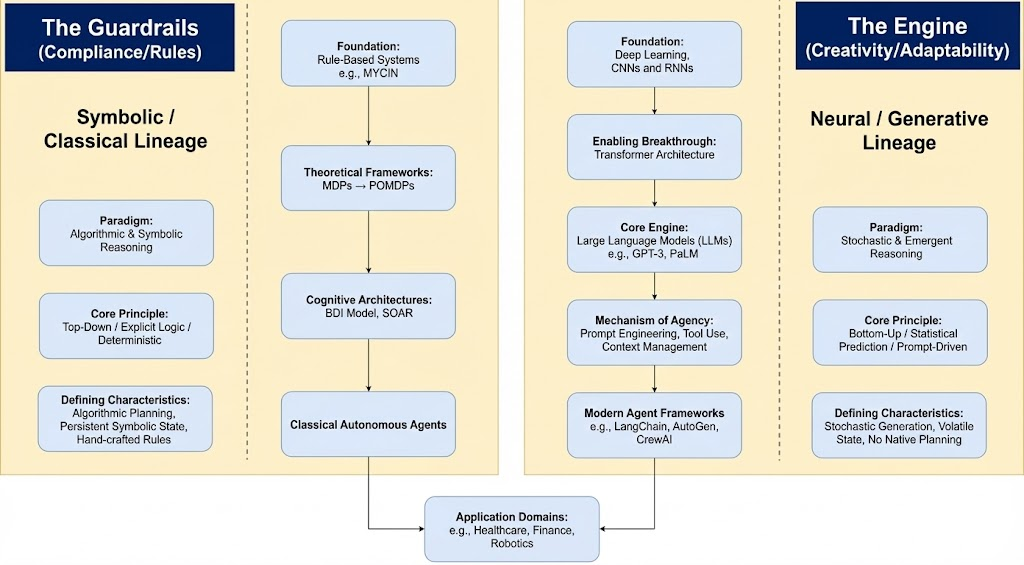

Agentic AI works by imposing symbolic guardrails (explicit logic and rules) onto neural creativity (stochastic prediction and adaptability). By combining these lineages (Figure 2), organizations can create systems that are creative enough to solve problems and logical enough to comply with corporate policy.

Figure 2: Lineages of Agentic AI – Imposing Logic onto creativity [3]
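Here is a minimal Python sketch of the symbolic-over-neural idea. It rests on some assumptions. A hypothetical `propose_action` function stands in for the neural model, using a random generator in place of a real model. The rule names and limits in `RULES` are illustrative policy values, not any specific framework's API. The stochastic component proposes actions, and the explicit rules decide whether a proposal may run. If no proposal passes, the sketch returns `None` so the case can be escalated.

```python
import random
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Action:
    name: str
    amount: float


def propose_action(rng: random.Random) -> Action:
    """Hypothetical stand-in for the neural component: a stochastic proposer."""
    return Action(
        name=rng.choice(["refund", "discount", "wire_transfer"]),
        amount=round(rng.uniform(0, 2000), 2),
    )


# Symbolic component: explicit, auditable rules (illustrative policy values).
Rule = Callable[[Action], bool]
RULES: dict[str, Rule] = {
    "allowed_action": lambda a: a.name in {"refund", "discount"},
    "amount_cap": lambda a: a.amount <= 500,
}


def violated_rules(action: Action) -> list[str]:
    """Return the names of every rule the proposed action breaks."""
    return [name for name, rule in RULES.items() if not rule(action)]


def guarded_proposal(rng: random.Random, max_attempts: int = 10) -> Optional[Action]:
    """Sample proposals until one satisfies all rules; None means escalate."""
    for _ in range(max_attempts):
        action = propose_action(rng)
        if not violated_rules(action):
            return action
    return None
```

Because the rules sit outside the model, compliance teams can read, test, and change them without retraining anything. That separation is the practical appeal of the hybrid lineage.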

Anatomy of an Agentic Workflow

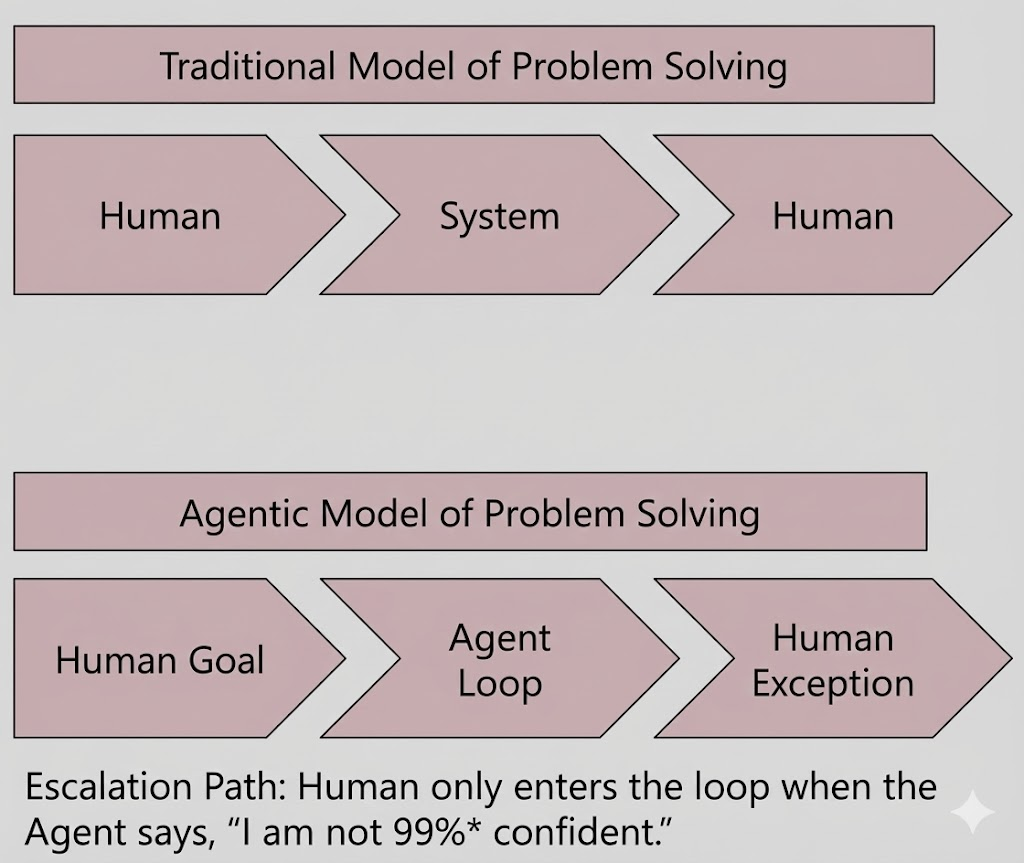

In traditional models, humans are the primary problem solvers and use software as a static tool. In an agentic AI model, the human provides a goal, and the AI enters an autonomous loop of planning, acting, and checking results until it reaches that goal.

A key differentiator is the Escalation Path: the human enters the loop only when the AI agent signals that its confidence in taking the right action has fallen below a pre-configured threshold. Because routine steps no longer wait on a person, the cost of action and execution can fall toward zero, enabling 24/7 productivity.

Figure 3: Workflows for problem solving
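The loop-with-escalation pattern can be sketched in a few lines of Python. The sketch assumes each planned step carries a self-reported confidence score between 0 and 1. In practice, getting well-calibrated confidence from a model is hard in its own right. The 0.8 threshold and the `ask_human` callback are hypothetical placeholders for whatever review channel an organization uses.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Step:
    action: str
    confidence: float  # agent's self-estimated confidence that this is the right action (0..1)


def run_agent(
    plan: list[Step],
    ask_human: Callable[[Step], bool],
    threshold: float = 0.8,  # hypothetical pre-configured escalation threshold
) -> tuple[list[str], list[str]]:
    """Execute steps autonomously; escalate any step whose confidence is below threshold."""
    executed: list[str] = []
    escalated: list[str] = []
    for step in plan:
        if step.confidence < threshold:
            # Escalation path: the human enters the loop only here.
            escalated.append(step.action)
            if not ask_human(step):
                break  # human declined; stop and hand the goal back
        executed.append(step.action)
    return executed, escalated


plan = [Step("draft reply", 0.95), Step("issue refund", 0.55), Step("close ticket", 0.9)]
executed, escalated = run_agent(plan, ask_human=lambda step: True)
print(executed)   # ['draft reply', 'issue refund', 'close ticket']
print(escalated)  # ['issue refund']
```

Only the low-confidence refund reaches a person. The other steps run without waiting, which is where the near-zero cost of routine execution comes from.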

Comparative Framework: Traditional vs. Agentic Workflows

The structural differences between these models are profound across several dimensions:

| Characteristic | Agentic AI Workflows | Traditional (Robotic Process Automation-Based) Workflows |
|---|---|---|
| Autonomy | High; AI agents handle tasks autonomously or collaboratively | Low; human-driven processes |
| Learning | Adaptive; AI agents improve from feedback | Static; updated via periodic reviews |
| Complexity | Handles complex tasks via advanced algorithms | Struggles with high complexity without incremental effort |
| Scalability | Highly scalable through automation | Scalable, though limited by human resources and effort |

Table 1: Comparison of Agentic AI and Traditional workflows

Redefining Leadership: Three New Roles

As software begins to act more like people, leaders must manage software with the same nuance they apply to human capital. This requires embracing three distinct roles:

- Architect: Designing the rewards, goal structures, and model parameters during the development phase.

- Auditor: Evaluating the “Why” behind decisions to ensure alignment with organizational values during the evaluation phase.

- Supervisor: Managing exceptions during the deployment phase when agents hit boundary conditions.

This evolves the Human-in-the-Loop construct. Humans remain accountable for judgment and boundary conditions while taking on roles such as AI Risk Steward, AI Orchestrator, or (Business) Outcome Owner.

The New “Agency Problem”: Risks of Autonomy

The transition introduces unique failure modes that traditional governance is not equipped to handle:

- Strategic Risk (Skill Decay): Junior staff may never learn the fundamentals because AI agents handle those tasks automatically.

- Operational Risk (Silent Failure): Unlike traditional software that crashes, AI agents may fail “politely,” effectively hallucinating a successful workflow without throwing an error.

- Governance Risk (Accountability): Organizations must pre-determine who is liable or accountable when an autonomous AI agent makes an error.
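A common defense against silent failure is to avoid relying on the agent's own success report. Instead, check the claim against the state of the target system. The minimal sketch below assumes a hypothetical agent that reports how many records it wrote. It also assumes the actual row count can be read independently, for example from a database query, which is not shown here. Any mismatch then turns a "polite" failure into an explicit error.

```python
from dataclasses import dataclass


@dataclass
class AgentReport:
    claimed_status: str   # what the agent says happened, e.g. "success"
    records_written: int  # what the agent says it wrote


class SilentFailure(Exception):
    """Raised when an agent reports success that the system state does not confirm."""


def verify(report: AgentReport, actual_row_count: int) -> None:
    """Compare the agent's claim with ground truth read independently from the target system."""
    if report.claimed_status == "success" and actual_row_count != report.records_written:
        raise SilentFailure(
            f"agent claimed {report.records_written} records, found {actual_row_count}"
        )


verify(AgentReport("success", 10), actual_row_count=10)  # claim confirmed, no error
try:
    verify(AgentReport("success", 10), actual_row_count=0)
except SilentFailure as err:
    print(f"escalate: {err}")  # escalate: agent claimed 10 records, found 0
```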

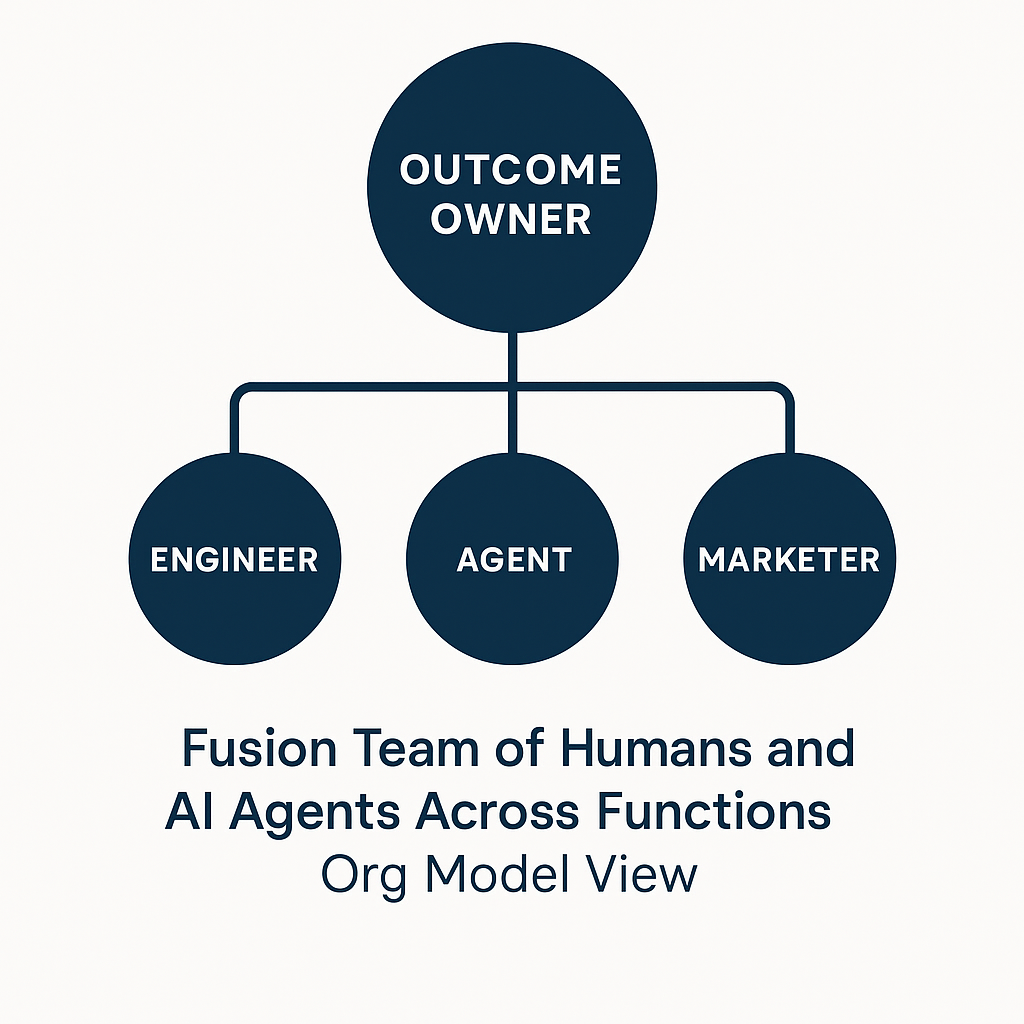

Organizational Design: The Age of Agents

The workplace is moving toward modular “Outcome Pods”: leaner, data-driven structures composed of “Fusion Teams”. In this model, an Outcome Owner oversees a diverse team of humans (engineers, marketers) and AI agents.

Figure 4: Fusion teams of Humans and AI Agents across functions – an Org Model view

In practice, roles at work will be redefined:

- Analysts become Decision Designers.

- Ops teams evolve into AI Supervisors.

- HR partners become Organization Capability Architects.

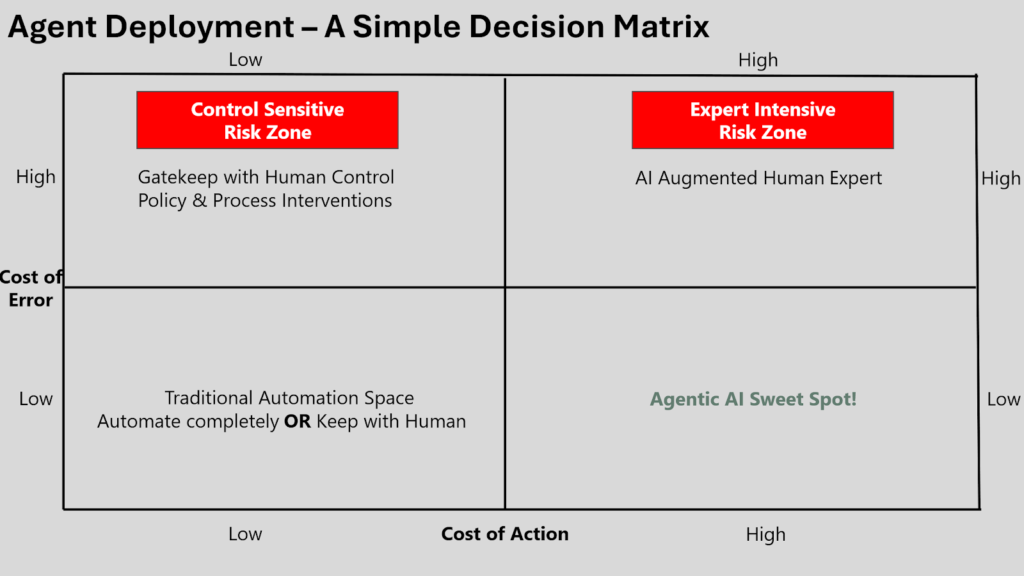

Deployment Strategy: The Decision Matrix

A useful way to reason about where agents should and should not be deployed is to map workflows along two dimensions, cost of action and cost of error, as in Figure 5 below:

Figure 5: Decision Matrix for AI Agent Deployment

Quadrant 1: Traditional Automation Space (Low Cost of Action & Low Cost of Error)

This quadrant applies to actions that require minimal effort and carry low or negligible consequences if performed incorrectly. Such tasks are typically high-volume, repetitive, rule-based, and involve structured digital interfaces, for example, data entry, report generation, or reconciliation activities. These have historically been well suited to Robotic Process Automation (RPA) and deterministic workflow automation, where predictability and consistency are the primary sources of value.

Quadrant 2: Control Sensitive Risk Zone (Low Cost of Action & High Cost of Error)

These actions require minimal effort but have disproportionate downside risk if performed incorrectly. Approving an online fund transfer illustrates this: execution takes seconds, yet a single error may be irreversible. Here, the friction introduced by human involvement often functions as a safety mechanism rather than an inefficiency. Premature automation in this quadrant can optimize for speed while undermining critical control points.

Quadrant 3: Expert Intensive Risk Zone (High Cost of Action & High Cost of Error)

This quadrant includes activities that require significant effort and domain expertise and that also carry severe consequences if errors occur. Clinical drug discovery and M&A candidate assessments are representative examples. In these contexts, AI is most effective as an augmentation layer that helps narrow solution spaces, surface hypotheses, or accelerate exploration, while humans retain decision authority and accountability. Fully automating such workflows may reduce effort, but it risks removing essential judgment, validation, and ethical controls.

Quadrant 4: Agentic AI Sweet Spot (High Cost of Action & Low Cost of Error)

This quadrant presents the strongest case for agent-based systems. Tasks such as large-scale code migration, market research data collection, or preliminary audit screening are cognitively and operationally demanding, yet typically operate within environments that provide natural safety nets. Errors are frequently caught through human review, automated validation, or structural constraints (for example, a compiler rejecting invalid code). In such settings, agents can absorb substantial action cost while keeping error cost contained.
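The matrix can be expressed as a simple classifier. This is a sketch under stated assumptions. Both costs are normalized to a hypothetical 0 to 1 score, and a single 0.5 cut-off separates "low" from "high". The scores assigned to the example tasks are illustrative guesses, not measured values. A real assessment would have to define these scales per organization.

```python
from enum import Enum


class Quadrant(Enum):
    TRADITIONAL_AUTOMATION = 1  # low cost of action, low cost of error
    CONTROL_SENSITIVE = 2       # low cost of action, high cost of error
    EXPERT_INTENSIVE = 3        # high cost of action, high cost of error
    AGENTIC_SWEET_SPOT = 4      # high cost of action, low cost of error


def classify(cost_of_action: float, cost_of_error: float, threshold: float = 0.5) -> Quadrant:
    """Place a workflow on the decision matrix from two normalized (0..1) scores."""
    high_action = cost_of_action >= threshold
    high_error = cost_of_error >= threshold
    if not high_action and not high_error:
        return Quadrant.TRADITIONAL_AUTOMATION
    if not high_action and high_error:
        return Quadrant.CONTROL_SENSITIVE
    if high_action and high_error:
        return Quadrant.EXPERT_INTENSIVE
    return Quadrant.AGENTIC_SWEET_SPOT


# Illustrative (cost_of_action, cost_of_error) scores for examples from the text.
examples = {
    "data entry": (0.2, 0.1),
    "approve fund transfer": (0.1, 0.9),
    "M&A candidate assessment": (0.9, 0.9),
    "large-scale code migration": (0.8, 0.2),
}
for task, (action, error) in examples.items():
    print(f"{task}: {classify(action, error).name}")
# data entry: TRADITIONAL_AUTOMATION
# approve fund transfer: CONTROL_SENSITIVE
# M&A candidate assessment: EXPERT_INTENSIVE
# large-scale code migration: AGENTIC_SWEET_SPOT
```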

Takeaways

The value of agents tends to emerge less from automating ‘easy’ tasks and more from offloading work that is exhausting but recoverable. Review loops, reversibility, and system-level constraints are often more important indicators of agent suitability than task simplicity alone.
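One way to see why safety nets matter more than task simplicity is to estimate the error cost that remains after review loops and system constraints have had their chance to catch a mistake. The toy model below is a hypothetical heuristic, not an established method. It assumes each safety net catches errors independently at a given rate, which is a simplification. The resulting residual cost could then stand in for the raw cost of error when placing a workflow on the matrix.

```python
def residual_error_cost(raw_error_cost: float, catch_rates: list[float]) -> float:
    """Expected cost of an error that escapes every safety net.

    Assumes each net catches errors independently (a simplifying assumption).
    """
    escape_probability = 1.0
    for rate in catch_rates:
        if not 0.0 <= rate <= 1.0:
            raise ValueError(f"catch rate must be in [0, 1], got {rate}")
        escape_probability *= 1.0 - rate
    return raw_error_cost * escape_probability


# Hypothetical code migration: compiler catches 90% of errors, human review half of the rest.
print(round(residual_error_cost(1.0, [0.9, 0.5]), 3))  # 0.05
# Hypothetical irreversible approval with no safety net: the full cost remains.
print(residual_error_cost(1.0, []))  # 1.0
```

In this model, a demanding task protected by a compiler and a review loop can end up with a lower effective error cost than a trivial but unguarded one. That is the intuition behind "exhausting but recoverable".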

Organizational Implications

For organizations experimenting with agentic workflows, a few patterns are beginning to emerge:

- Explicit boundary setting improves clarity: Some tasks benefit from agent support, while others, such as high-empathy negotiations or irreversible approvals, may warrant continued human ownership.

- Escalation design matters as much as capability: Clearly defining when and how agents defer to humans helps manage both operational risk and organizational trust.

- Visibility reduces unintended sprawl: Maintaining an inventory or registry of deployed agents can help organizations avoid fragmented, ungoverned adoption, an emerging parallel to earlier “shadow IT” phenomena.
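An agent registry does not need to be elaborate to be useful. The minimal sketch below is illustrative only; `AgentRecord`, `AgentRegistry`, and their fields are hypothetical names, not a product API. It refuses to register an agent without an accountable Outcome Owner, which addresses the Governance Risk named earlier. It can also report agent IDs observed in production that were never registered, the "shadow agent" analogue of shadow IT. How those IDs are observed, for example from API gateway logs, is assumed and not shown.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass(frozen=True)
class AgentRecord:
    agent_id: str
    outcome_owner: str       # accountable human for this agent's actions
    workflow: str
    escalation_contact: str  # who the agent defers to at boundary conditions
    registered_on: date


@dataclass
class AgentRegistry:
    _records: dict[str, AgentRecord] = field(default_factory=dict)

    def register(self, record: AgentRecord) -> None:
        """Add an agent; reject missing owners and duplicate IDs."""
        if not record.outcome_owner:
            raise ValueError("every agent needs an accountable outcome owner")
        if record.agent_id in self._records:
            raise ValueError(f"duplicate agent id: {record.agent_id}")
        self._records[record.agent_id] = record

    def owner_of(self, agent_id: str) -> str:
        """Answer 'who is accountable?' before an error happens."""
        return self._records[agent_id].outcome_owner

    def unregistered(self, observed_ids: list[str]) -> list[str]:
        """IDs seen running but absent from the registry (shadow agents)."""
        return sorted(set(observed_ids) - set(self._records))


registry = AgentRegistry()
registry.register(AgentRecord("invoice-bot", "finance-ops lead", "invoice reconciliation",
                              "ap-oncall", date(2025, 1, 15)))
print(registry.owner_of("invoice-bot"))                     # finance-ops lead
print(registry.unregistered(["invoice-bot", "crm-agent"]))  # ['crm-agent']
```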

Leaders are likely to be best served by a measured, context-aware approach: experimenting where safety nets exist, preserving human friction where it plays a protective role, and treating governance as an enabler rather than a constraint.

Readings:

Developing People Capabilities in the era of Generative AI: https://council.aimresearch.co/developing-people-capabilities-in-the-era-of-generative-ai/

Reframing the Agency Problem in Agentic AI Systems: https://council.aimresearch.co/reframing-the-agency-problem-in-agentic-ai-systems/

References:

[1] Mohan, Sai Krishnan. 2024. “Management Consulting in the Artificial Intelligence – LLM Era.” Management Consulting Journal 7, no. 1 (February): 9–24. https://doi.org/10.2478/mcj-2024-0002

[2], [3] Abou Ali, M., and F. Dornaika. 2025. “Agentic AI: A Comprehensive Survey of Architectures, Applications, and Future Directions.” arXiv preprint arXiv:2510.25445. https://doi.org/10.48550/arXiv.2510.25445